Challenges

ActEV Self-Reported Leaderboard (SRL)

The ActEV Self-Reported Leaderboard (SRL) Challenge is a self-reported leaderboard (take-home) evaluation. Participants download an ActEV SRL test set, run their activity detection algorithms on it using their own hardware platforms, and then submit their system output to the evaluation server for scoring. We will invite the top two teams to give oral presentations at the CVPR’22 ActivityNet workshop.

SoccerNet Challenge

The SoccerNet challenges are back! We are proud to announce new soccer video challenges at CVPR 2022, including (i) Action Spotting and Replay Grounding, (ii) Camera Calibration and Field Localization, (iii) Player Re-Identification and (iv) Ball and Player Tracking. Feel free to check out our presentation video for more details. The challenges end on the 30th of May 2022. Each challenge has a $1000 prize sponsored by EVS Broadcast Equipment, SportRadar and Baidu Research. The latest news, including leaderboards, will be shared throughout the competition on our Discord server.

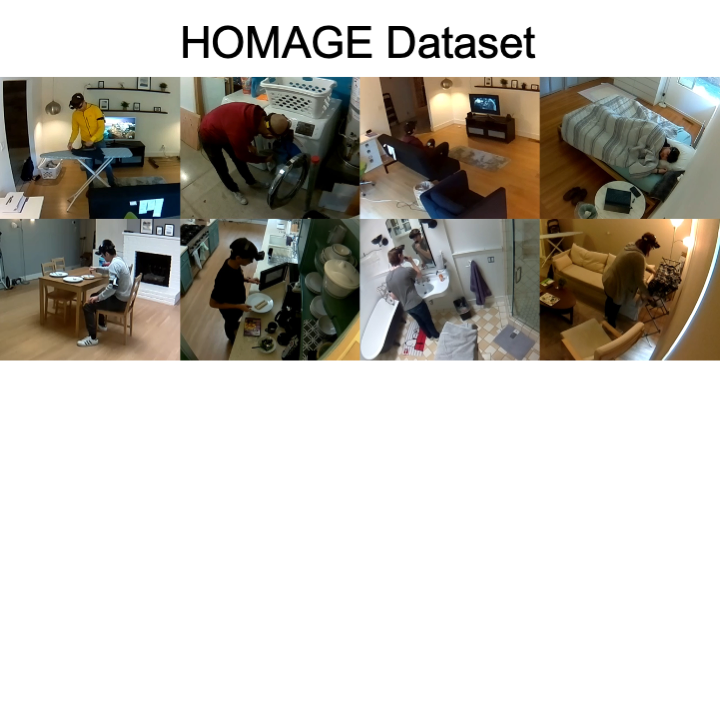

HOMAGE

This challenge leverages the Home Action Genome dataset, which contains multi-view videos of indoor daily activities. We use scene graphs to describe the relationship between a person and the object used during the execution of an action. In this track, the algorithms need to predict per-frame scene graphs, including how they change as the video progresses.
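To make the idea of per-frame scene graphs concrete, here is a minimal Python sketch. It is an illustration only and not the official HOMAGE annotation or submission format: the `Relation` class, the `graph_changes` helper, and the labels such as `holding` and `drinking_from` are all hypothetical. It represents each frame's scene graph as a set of (subject, predicate, object) triples and reports the frames at which the graph changes.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple


@dataclass(frozen=True)
class Relation:
    """One scene-graph edge (hypothetical schema, not the dataset's own)."""
    subject: str
    predicate: str
    obj: str


# Frame index -> set of relations present in that frame.
SceneGraphs = Dict[int, FrozenSet[Relation]]
Change = Tuple[int, FrozenSet[Relation], FrozenSet[Relation]]


def graph_changes(graphs: SceneGraphs) -> List[Change]:
    """Return (frame, added, removed) for every frame whose graph
    differs from the previous frame's graph."""
    changes: List[Change] = []
    prev: FrozenSet[Relation] = frozenset()
    for frame in sorted(graphs):
        cur = graphs[frame]
        added, removed = cur - prev, prev - cur
        if added or removed:
            changes.append((frame, added, removed))
        prev = cur
    return changes


# Illustrative labels only; they are assumptions, not HOMAGE classes.
example: SceneGraphs = {
    0: frozenset({Relation("person", "holding", "cup")}),
    1: frozenset({Relation("person", "holding", "cup")}),
    2: frozenset({Relation("person", "drinking_from", "cup")}),
}

for frame, added, removed in graph_changes(example):
    print(frame, sorted(r.predicate for r in added),
          sorted(r.predicate for r in removed))
```

Storing each frame as a set of triples keeps the comparison between consecutive frames a simple set difference, which is one straightforward way to express how the graph changes as the video progresses.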