Program

| Time |

Talk |

Slides |

Memories |

| 14:00 |

Bernard Ghanem: Opening remarks |

Slides |

|

| 14:15 |

Joao Carreira: Video Understanding and the Kinetics Dataset |

Slides |

|

| 14:45 |

Jitendra Malik: AVA: A Video Dataset of Atomic Visual Actions |

Slides |

|

| 15:15 |

Huijuan Xu: R-C3D: Region-Convolutional 3D Network for Activity Detection (Most Innovative) |

Slides |

|

| 15:35 |

Bernard Ghanem: Task1: Untrimmed Video Classification (Dataset and Results) |

Slides |

|

| 15:45 |

Afternoon Break |

| 16:15 |

Jiankang Deng: Improve Untrimmed Video Classification and Action Localization by Human and Object Tubelets (Winner Task 1) |

Slides |

|

| 16:35 |

Brian Zhang: Task2: Trimmed Action Recognition (Dataset and Results) |

Slides |

|

| 16:45 |

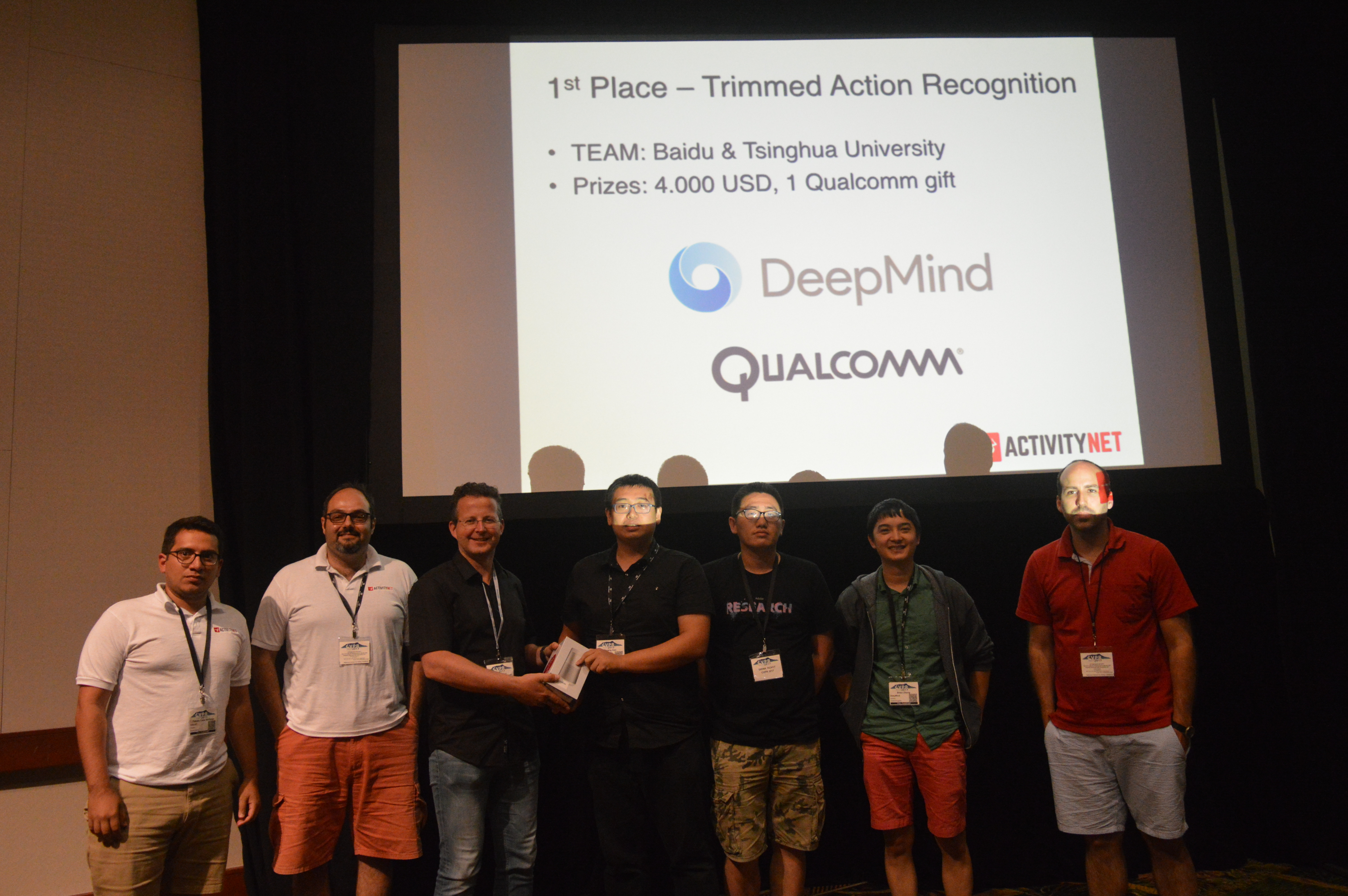

Chuang Gan: Revisiting the Effectiveness of Off-the-shelf Temporal Modeling Approaches for Video Recognition (Winner Task 2) |

Slides |

|

| 17:05 |

Fabian Caba: Task3&4: Temporal Action Proposals and Localization (Dataset and Results) |

Slides |

|

| 17:20 |

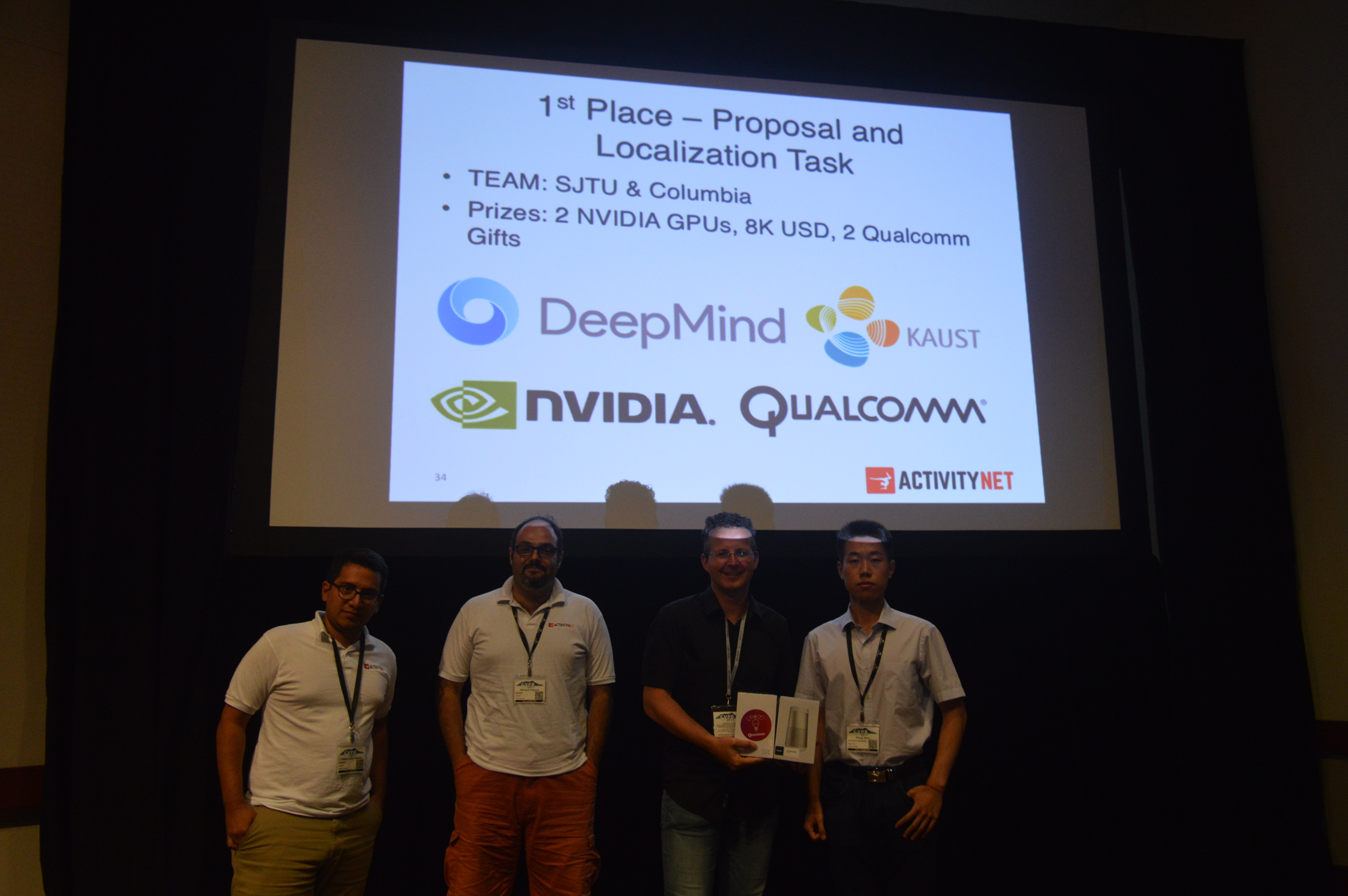

Zheng Shou: Temporal Convolution Based Action Proposal: Submission to ActivityNet 2017 (Winner Task3&4) |

Slides |

|

| 17:40 |

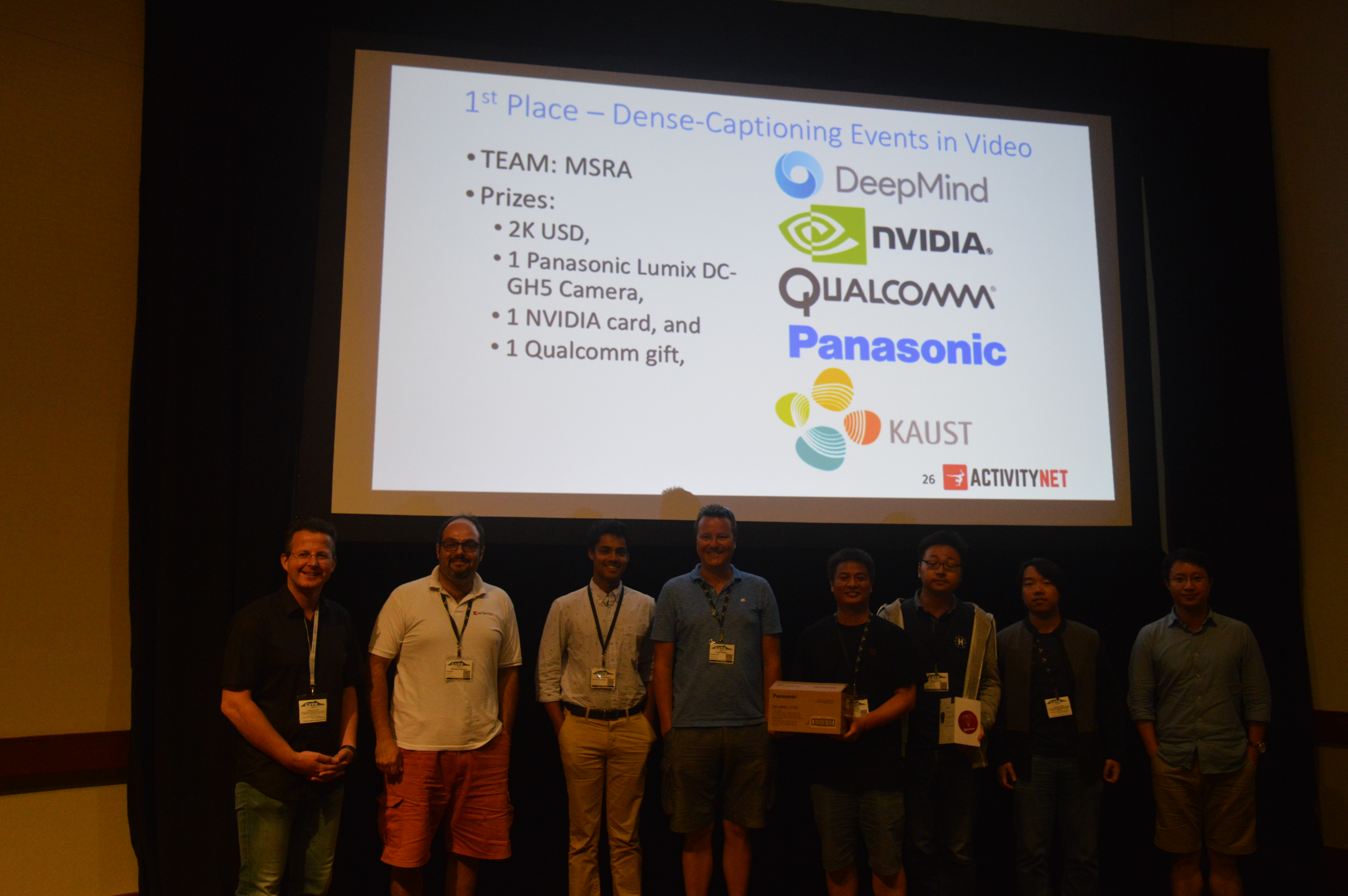

Ranjay Krishna: Task5: Dense-Captioning Events in Videos (Dataset and Results) |

Slides |

|

| 17:50 |

Ting Yao: ActivityNet Challenge 2017: Dense-Captioning Event in Videos (Winner Task 5) |

Slides |

|

| 16:10 |

Bernard Ghanem: Closing Remarks |

Slides |

|

Important Dates

May 1, 2017: Development kit and data will be available.

June 12, 2017: Test subsets will be available.

June 19, 2017: Evaluation server will be available.

July 5, 2017: Leaderboard will be public.

~~July 9, 2017~~ July 16, 2017 (11:59 PM Hawaii-Aleutian Time Zone): Challenge submission deadline.

~~July 11, 2017~~ July 18, 2017 (11:59 PM Hawaii-Aleutian Time Zone): Paper submission deadline.

~~July 12, 2017~~ July 19, 2017 (11:59 PM Hawaii-Aleutian Time Zone): Winners announcement.

July 26, 2017: Workshop session.