
What is ActivityNet?

ActivityNet is a large-scale video benchmark for human activity understanding. It aims to cover a wide range of complex human activities that are of interest to people in their daily lives. In its current version, ActivityNet provides samples from 203 activity classes, with an average of 137 untrimmed videos per class and 1.41 activity instances per video, for a total of 849 video hours.

How was ActivityNet made?

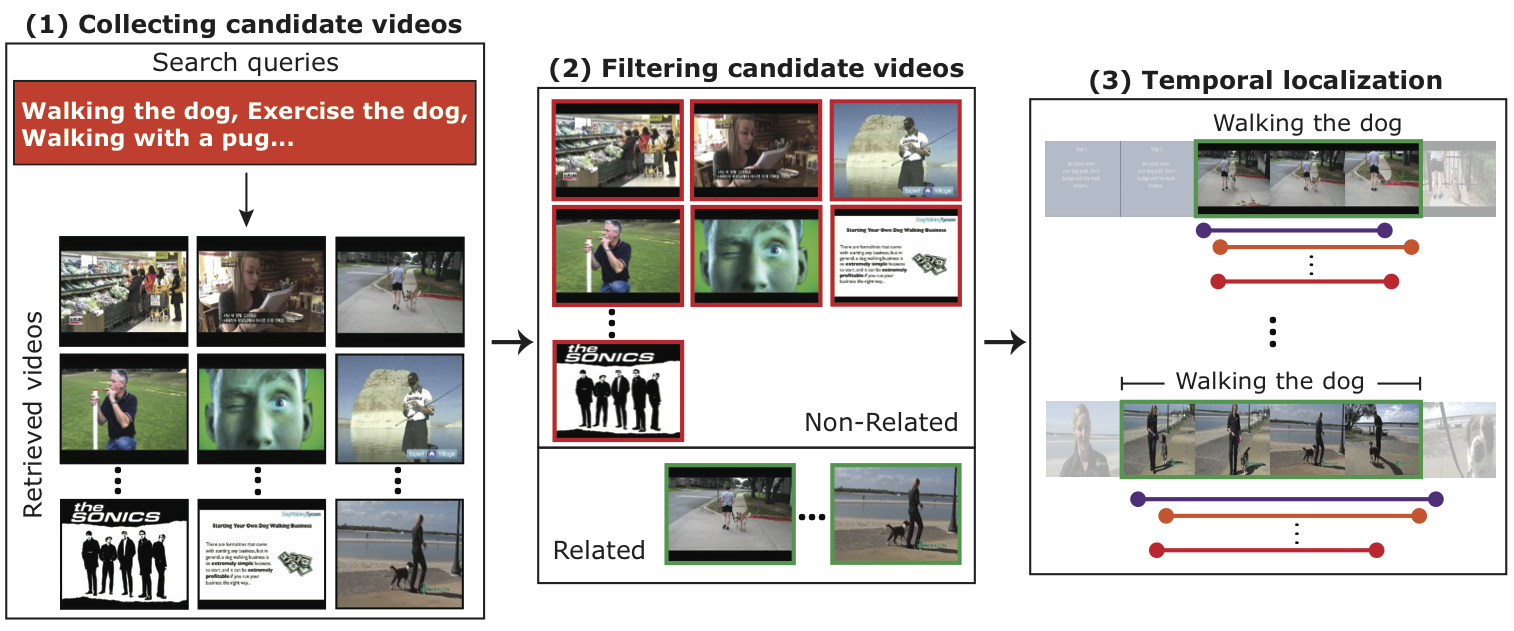

We rely heavily on crowdsourcing, specifically Amazon Mechanical Turk, to acquire and annotate ActivityNet. Our acquisition pipeline has three main steps: collection, filtering, and temporal localization.

- Collecting candidate videos: We search the web for candidate videos for each ActivityNet category, using expanded queries as well as the category name.

- Filtering candidate videos: Amazon Mechanical Turk workers verify the retrieved videos and discard those that are not related to any intended activity.

- Temporal localization: Workers mark the start and end times of each segment in which the activity is performed. Multiple Amazon Mechanical Turk workers annotate each video.
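The filtering and temporal localization steps both combine responses from several workers. The page does not say how those responses are combined, so the following is only a minimal sketch under assumed rules: a strict majority vote for filtering and a per-boundary median for localization. The function names `keep_video` and `merge_segments` and the sample data are hypothetical and are not taken from the ActivityNet tooling.

```python
from statistics import median


def keep_video(votes):
    """Return True when a strict majority of workers marked the video as relevant.

    Assumption: majority voting; the actual filtering rule is not given in the source.
    """
    return sum(votes) > len(votes) / 2


def merge_segments(segments):
    """Combine (start, end) annotations, in seconds, from several workers.

    Assumption: the median of each boundary; the actual aggregation is not given.
    """
    starts = [start for start, _ in segments]
    ends = [end for _, end in segments]
    return median(starts), median(ends)


# Hypothetical worker responses for one candidate video.
votes = [True, True, False]
segments = [(12.0, 45.5), (10.5, 44.0), (13.0, 47.0)]

if keep_video(votes):
    start, end = merge_segments(segments)
    print(f"activity from {start:.1f}s to {end:.1f}s")
```

The median is used here because it is less sensitive than the mean to a single worker who marks a boundary far from the others. Other choices, such as clustering the annotated intervals, would fit the same description.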

See our ICMR 2014 paper for detailed information: Fabian Caba Heilbron and Juan Carlos Niebles. "Collecting and Annotating Human Activities in Web Videos." In ICMR, 2014.